Event-Driven Architecture: The Only Way to Make Data a First-Class Citizen

- Petyo Pahunchev

- EDA, Data

- September 22, 2024


As a consultant, I’ve witnessed a recurring theme across many projects: data platforms are often built backwards. 🤯

We’ve all heard the saying that “data is the new oil,” and while this analogy isn’t entirely wrong, we tend to treat data the same way we treat oil. We extract it, store it, and then process it to realise its value later on. 🛢️ But this delayed process brings its own set of challenges and inefficiencies, especially in today’s world, where real-time data is key to staying competitive.

The Problems with Current Data Architectures

Here are three common problems with the traditional data platform approach:

1. Timeliness of Insights ⏰

One of the biggest issues with the traditional data extraction and processing pipeline is the delay in realising insights. Data is collected, stored, and only processed later, often in batch jobs run overnight or on a scheduled basis.

For many real-time use cases, this is simply too slow. By the time insights are generated, they may no longer be actionable: the moment to act on them may already have passed. Running batch transformation pipelines (for example, dbt models) frequently enough to meet real-time demands is nearly impossible. For businesses that rely on immediate insights, such as fraud detection, real-time personalisation, or dynamic pricing, this delay can be crippling.
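To put a rough number on that delay, here is a back-of-the-envelope sketch. It assumes a single nightly batch job that starts at a fixed hour and picks up only events that arrived before it started; the 30-minute job duration and the 2-second streaming processing time are illustrative assumptions, not measurements.

```python
from datetime import datetime, timedelta


def batch_visible_at(event_time: datetime, run_hour: int, job_duration: timedelta) -> datetime:
    """When an event's insight becomes available, assuming one batch run per day at run_hour.

    An event is picked up by the first run that starts strictly after it occurred.
    """
    next_run = event_time.replace(hour=run_hour, minute=0, second=0, microsecond=0)
    if next_run <= event_time:
        next_run += timedelta(days=1)
    return next_run + job_duration


def streaming_visible_at(event_time: datetime, processing_time: timedelta) -> datetime:
    """When the same insight becomes available if the event is processed as it arrives."""
    return event_time + processing_time


# Example: a suspicious payment lands five minutes after a 02:00 nightly job has started.
event = datetime(2024, 9, 22, 2, 5)
batch_delay = batch_visible_at(event, run_hour=2, job_duration=timedelta(minutes=30)) - event
stream_delay = streaming_visible_at(event, processing_time=timedelta(seconds=2)) - event
print(batch_delay)   # 1 day, 0:25:00
print(stream_delay)  # 0:00:02
```

In the worst case the batch delay approaches the full schedule interval plus the job's runtime, which is exactly the window in which a fraudulent transaction goes unnoticed.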

2. Fragility of Data Pipelines 💻

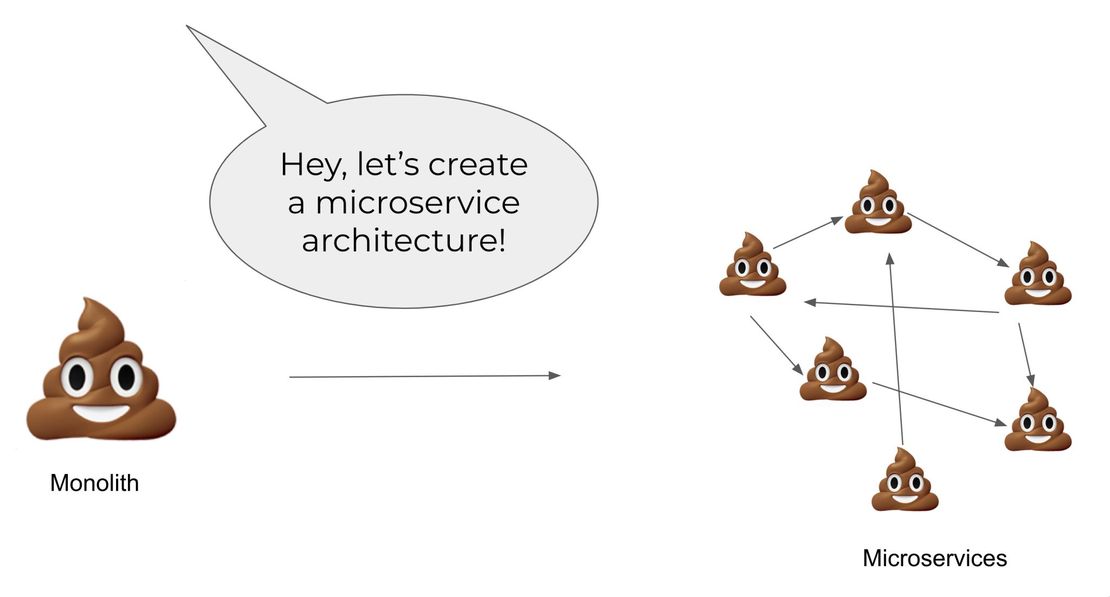

Traditional data platforms often involve extracting data from operational systems, which creates tight coupling between the platform and those systems. This coupling leads to fragility, because the internal data models of operational systems are expected to evolve as their implementations change.

Unfortunately, when these models change, they often break downstream data pipelines, leaving data engineers scrambling to fix issues. It’s not uncommon to see teams working late into the night or on weekends, simply to resolve these pipeline problems. These breakdowns can slow down operations and reduce the trust placed in the data platform.
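A small sketch makes the difference concrete. The table rows, column names, and the `OrderPlaced` contract below are hypothetical; the point is only that a pipeline reading internal columns breaks on a rename, while a consumer of a published event does not, because the producing team updates its own internal-to-event mapping as part of the refactor.

```python
from dataclasses import dataclass

# Hypothetical rows from an orders service's internal table, before and after a
# refactor that renames columns. Amounts are in minor currency units (e.g. pence).
ROW_BEFORE = {"id": 42, "cust": "c-7", "amt": 1999}
ROW_AFTER = {"id": 42, "customer_id": "c-7", "amount_minor": 1999}


def revenue_from_row(row: dict) -> int:
    """A downstream pipeline that reads the internal schema directly (tightly coupled)."""
    return row["amt"]  # raises KeyError as soon as the column is renamed


@dataclass(frozen=True)
class OrderPlaced:
    """A published event contract (hypothetical) that the orders team keeps stable."""
    order_id: int
    customer_id: str
    amount_minor: int


# The producer owns the mapping from its internal model to the event, so it
# updates this mapping in the same change as its own refactor.
def to_event_before(row: dict) -> OrderPlaced:
    return OrderPlaced(order_id=row["id"], customer_id=row["cust"], amount_minor=row["amt"])


def to_event_after(row: dict) -> OrderPlaced:
    return OrderPlaced(order_id=row["id"], customer_id=row["customer_id"], amount_minor=row["amount_minor"])


def revenue_from_event(event: OrderPlaced) -> int:
    """A downstream consumer that depends only on the event contract."""
    return event.amount_minor


print(revenue_from_event(to_event_before(ROW_BEFORE)))  # 1999
print(revenue_from_event(to_event_after(ROW_AFTER)))    # 1999
try:
    revenue_from_row(ROW_AFTER)
except KeyError as missing:
    print(f"coupled pipeline broke on missing column {missing}")
```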

3. Misuse of Data Platforms for Operational Use Cases 🤔

Another issue arises when data platforms, initially designed for analytical purposes, are used for operational tasks. Because data platforms store all sorts of data, they sometimes become an escape hatch for poorly built operational systems.

For example, you might see operational reports built on top of a data platform’s PCI data (payment card data governed by the PCI DSS standard). The justification is often that the data platform is the only place where “all the data” is available. However, this misuse can lead to governance, security, and performance problems, because the platform was never designed to handle such operational loads.

The Solution: Event-Driven Architectures 🚀

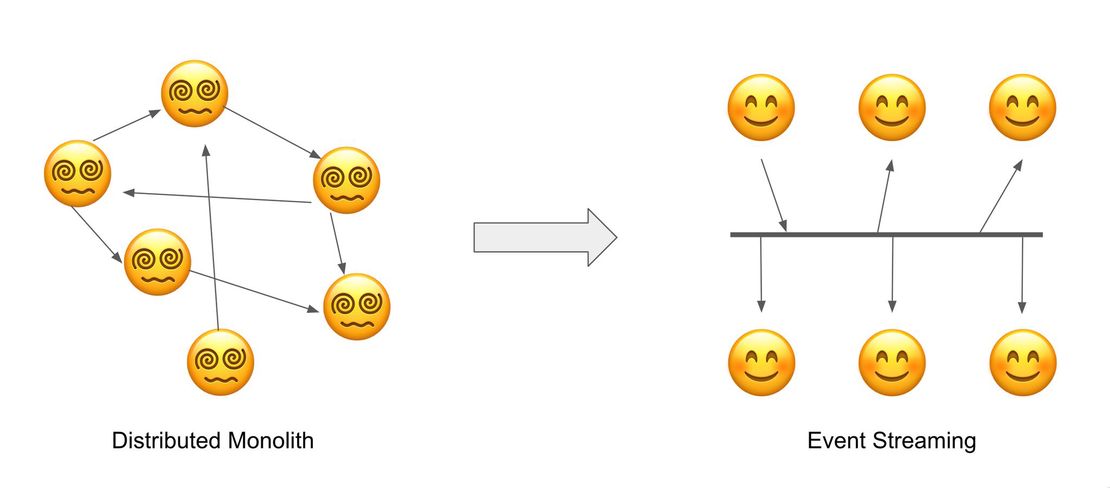

To address these challenges, there needs to be a paradigm shift away from traditional data platforms towards event-driven architectures (EDA). EDAs offer a way to decouple systems and create a more agile, scalable, and robust data ecosystem.

Why Event-Driven Architectures? 🎉

Event-driven architectures focus on the production, distribution, processing, and storage of business events. Events are high-quality data that carry business meaning. Most importantly, they remain unchanged even as the internal workings of the systems that produce them evolve.
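As an illustration of what such an event might look like in code, here is a minimal sketch. The `CustomerPurchased` type, its fields, and the `schema_version` field are assumptions chosen for the example, not a prescribed format; `frozen=True` is simply Python's way of rejecting attribute reassignment after construction.

```python
import json
from dataclasses import FrozenInstanceError, asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CustomerPurchased:
    """A business fact, named in the past tense. frozen=True blocks attribute reassignment."""
    event_id: str
    customer_id: str
    product_sku: str
    quantity: int
    occurred_at: str  # ISO-8601 timestamp in UTC
    schema_version: int = 1  # hypothetical versioning field for the published contract

    def to_json(self) -> str:
        # Sorted keys give a stable, reproducible serialisation for the wire.
        return json.dumps({"type": type(self).__name__, **asdict(self)}, sort_keys=True)


evt = CustomerPurchased(
    event_id="e-001",
    customer_id="c-7",
    product_sku="SKU-123",
    quantity=2,
    occurred_at=datetime(2024, 9, 22, 10, 30, tzinfo=timezone.utc).isoformat(),
)
print(evt.to_json())

try:
    evt.quantity = 5  # attempting to rewrite history
except FrozenInstanceError:
    print("events are immutable; record a new event instead")
```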

In contrast to traditional systems that rely on request/response models to maintain state, an EDA continuously captures business events in real time and streams them across the platform. This approach offers several critical benefits:

- Timeliness: Events are captured and processed as they happen, providing real-time insights without the delays inherent in batch processing.

- Decoupling: Events decouple system components, meaning that as internal systems evolve, the overall architecture remains resilient and less prone to breaking.

- Operational and Analytical Synergy: By capturing events at the point of occurrence, you can seamlessly integrate operational and analytical use cases, enabling immediate insights and actions while keeping your data platform’s analytical focus intact.
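The three benefits above can be sketched with a toy, in-process publish/subscribe bus. This is an assumption-laden stand-in for a real broker: it is synchronous and not durable. It does show the shape of the idea: the producer publishes an event without knowing who consumes it, and an operational consumer (a hypothetical fraud check) and an analytical consumer (a running revenue total) react to the same event as it happens.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-process pub/sub bus: producers know event types, not consumers."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Deliver the event to every subscriber, in subscription order.
        for handler in self._subscribers[event_type]:
            handler(payload)


flagged: list[str] = []
revenue = {"total_minor": 0}


def fraud_check(event: dict) -> None:
    # Operational use: act on the event immediately (the threshold is hypothetical).
    if event["amount_minor"] > 100_000:
        flagged.append(event["order_id"])


def revenue_tracker(event: dict) -> None:
    # Analytical use: keep an aggregate up to date as events arrive.
    revenue["total_minor"] += event["amount_minor"]


bus = EventBus()
bus.subscribe("OrderPlaced", fraud_check)
bus.subscribe("OrderPlaced", revenue_tracker)
bus.publish("OrderPlaced", {"order_id": "o-1", "amount_minor": 1_999})
bus.publish("OrderPlaced", {"order_id": "o-2", "amount_minor": 250_000})
print(flagged, revenue)
```

Adding a third consumer is one more `subscribe` call; the producer code does not change, which is the decoupling the list describes.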

Why Events Are First-Class Citizens in EDA 🌐

At the heart of event-driven architecture is the notion that events are the fundamental building blocks of the system. Unlike traditional architectures, where data is stored and processed later, EDAs treat events as first-class citizens that drive the system forward.

What Makes Events Superior?

- Business Meaning: Each event represents a meaningful occurrence in the business, such as a customer making a purchase, a shipment being dispatched, or a user interacting with an application. This makes the data actionable in real time.

- Immutability: Once an event has occurred, it doesn’t change. This stability ensures that downstream systems don’t break when the producing system changes how it generates or stores its internal data.

- Scalability: EDAs naturally support scalability because they decouple the producer and consumer of events, allowing each to scale independently based on demand.
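Immutability and independent scaling fit together naturally in an append-only log, the model Kafka is built around. The sketch below is a deliberately simplified, in-memory version (the class and method names are hypothetical): events are only ever appended, and each consumer tracks its own read offset, so a fast real-time consumer and a slower reporting consumer progress at their own pace, and a new consumer can replay history from the beginning.

```python
from dataclasses import dataclass, field


class EventLog:
    """Simplified append-only log: events are only ever appended, never updated in place."""

    def __init__(self) -> None:
        self._events: list[str] = []

    def append(self, event: str) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the newly appended event

    def read(self, offset: int, max_events: int) -> list[str]:
        return self._events[offset:offset + max_events]


@dataclass
class Consumer:
    """Each consumer tracks its own offset, so it can scale or lag independently."""
    log: EventLog
    offset: int = 0
    seen: list[str] = field(default_factory=list)

    def poll(self, max_events: int = 10) -> int:
        batch = self.log.read(self.offset, max_events)
        self.seen.extend(batch)
        self.offset += len(batch)
        return len(batch)


log = EventLog()
for i in range(5):
    log.append(f"OrderPlaced#{i}")

realtime = Consumer(log)
reporting = Consumer(log)
realtime.poll(max_events=10)  # reads all 5 events
reporting.poll(max_events=2)  # a slower consumer reads 2 and lags behind
log.append("OrderPlaced#5")   # the producer is unaffected by either consumer
realtime.poll()
reporting.poll()

audit = Consumer(log)  # a consumer added later replays the full history
audit.poll()
print(realtime.offset, reporting.offset, audit.offset)
```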

How to Transition to an Event-Driven Architecture

Transitioning to an EDA requires a shift in mindset as well as technical implementation. Here are a few key steps to guide the process:

Identify Critical Business Events: Start by identifying the key events in your business processes—those that drive meaningful decisions and actions. These will become the backbone of your event-driven architecture.

Build a Robust Event Streaming Platform: Tools like Apache Kafka are well suited to streaming, storing, and distributing events in real time. Kafka scales horizontally by splitting topics into partitions spread across brokers, stores events durably, and delivers them to consumers with low latency.
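One Kafka concept worth understanding early is key-based partitioning: records with the same key go to the same partition, and Kafka preserves order only within a partition. The sketch below imitates that idea in plain Python. It is a simplified stand-in, not Kafka's client API: Kafka's default partitioner hashes the key with murmur2, whereas this example uses CRC32 because it is in the standard library, and the partition count and records are hypothetical.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3  # hypothetical topic partition count


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition; the same key always maps to the same partition.

    Kafka's default partitioner hashes the key bytes with murmur2; CRC32 is used
    here only because it ships with Python's standard library.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Hypothetical stream of (key, event type) records, keyed by order ID.
records = [
    ("order-1", "OrderPlaced"),
    ("order-2", "OrderPlaced"),
    ("order-1", "PaymentTaken"),
    ("order-2", "OrderCancelled"),
    ("order-1", "ShipmentDispatched"),
]

partitions: dict[int, list[tuple[str, str]]] = defaultdict(list)
for key, event_type in records:
    partitions[partition_for(key)].append((key, event_type))

for partition, entries in sorted(partitions.items()):
    print(partition, entries)
```

Keying by a business entity such as the order ID keeps each order's events in sequence while letting different orders be processed in parallel on different partitions.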

Decouple Systems: As you move towards an EDA, focus on decoupling your system components, ensuring that each service can produce and consume events independently of one another. This will reduce fragility and make your system more resilient to change.

Use Events for Both Operational and Analytical Use Cases: By using events to drive real-time analytics and business operations, you can ensure that your platform delivers timely, actionable insights across the board. This synergy will enable you to get the most out of your data platform.

Conclusion

If you want to ensure timeliness, avoid pipeline fragility, and use data platforms for their intended purpose, adopting an event-driven architecture is the way forward. It enables real-time processing, decouples your systems, and ensures that your data is always a first-class citizen.

It’s time to embrace the future of data platforms and make event-driven architecture the backbone of your next project. 💪

Takeaway: Event-driven architectures are the key to ensuring timely insights, reducing pipeline fragility, and building platforms that seamlessly support both operational and analytical use cases. Treat events as first-class citizens and make data work for you in real time.